AI chatbots like ChatGPT have changed how we interact with technology, making it possible to hold realistic, sophisticated conversations with machines. That same fluency raises a concern: these chatbots could contribute to the spread of fake news and misinformation. As they become more advanced and more widely used, it is worth considering the implications of their use and how the problem can be addressed.

In this blog post, we will explore the ways in which AI chatbots like ChatGPT can contribute to the spread of fake news and misinformation, and consider some strategies for addressing this problem.

Chatbots could be used to spread fake news and misinformation

AI chatbots like ChatGPT generate fluent, convincing responses to user queries, which makes it difficult to tell whether the information they provide is accurate. This has led to concerns that they could spread fake news and misinformation, whether intentionally or unintentionally.

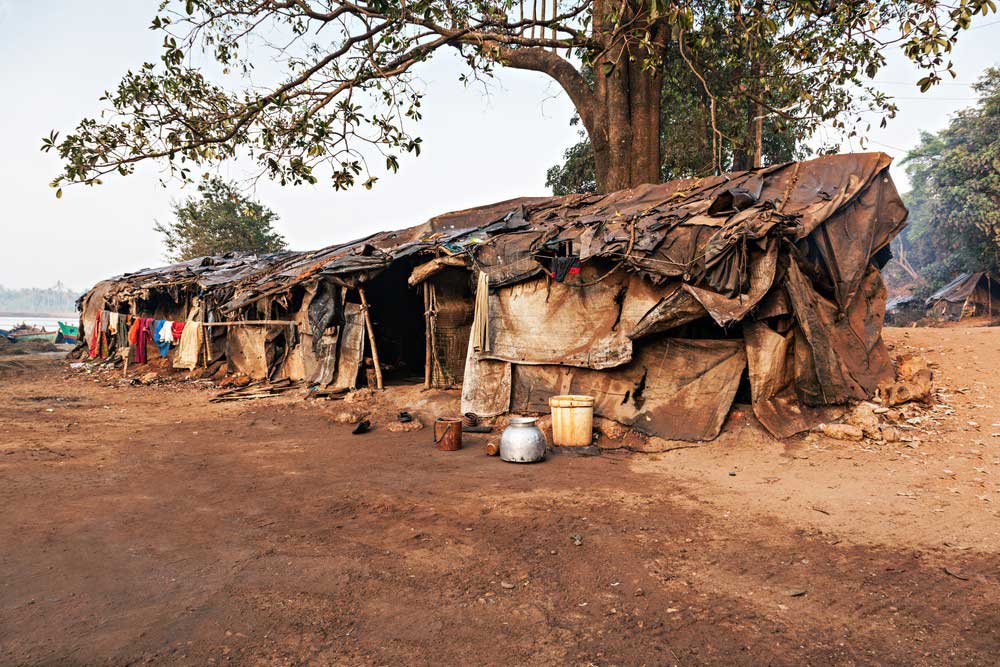

One way AI chatbots can contribute to the spread of fake news and misinformation is through biased or unreliable sources of information. If a chatbot is configured to rely on particular sources, or is trained on biased datasets, it can propagate inaccurate or misleading information. For example, a chatbot trained on a dataset containing inaccurate information about a particular topic may repeat that misinformation to the users who interact with it.

Another way AI chatbots can contribute is when their underlying language models are used to generate sensational or attention-grabbing headlines. Such headlines may not reflect the content of the article or the accuracy of the information in it, but they can still be effective at spreading misinformation and driving traffic to certain websites or platforms.

How can this be addressed?

Several strategies can help address fake news and misinformation spread by AI chatbots. One approach is to improve the quality of the datasets used to train these chatbots, so that they are not biased or built on unreliable sources of information. This can involve drawing on diverse sources, fact-checking the data, and implementing measures to identify and remove sources of misinformation.
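To make the idea of removing unreliable sources a little more concrete, here is a minimal Python sketch. It assumes each training record carries the URL it came from and that a blocklist of unreliable domains is available; the `Record` type, the `UNRELIABLE_DOMAINS` set, and the domain names in it are all hypothetical and chosen only for illustration. A real pipeline would need a maintained reliability rating and far more than a domain check.

```python
from dataclasses import dataclass
from urllib.parse import urlparse

# Hypothetical blocklist. In practice this would come from a maintained
# source-reliability rating, not a hard-coded set.
UNRELIABLE_DOMAINS = {"example-rumors.test", "clickbait.test"}


@dataclass
class Record:
    text: str
    source_url: str


def domain_of(url: str) -> str:
    """Return the lower-cased host of a URL without a leading 'www.'."""
    host = urlparse(url).hostname or ""
    return host.removeprefix("www.")  # requires Python 3.9+


def filter_records(records):
    """Split records into (kept, removed) based on the source domain."""
    kept, removed = [], []
    for record in records:
        if domain_of(record.source_url) in UNRELIABLE_DOMAINS:
            removed.append(record)
        else:
            kept.append(record)
    return kept, removed
```

Returning the removed records alongside the kept ones is a deliberate choice in this sketch: it lets a human audit what was filtered out rather than silently discarding it.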

Another strategy is to develop algorithms and tools that are capable of detecting and flagging misinformation spread by AI chatbots. These tools could analyze the language and tone used by chatbots, as well as the sources of information they rely on, to identify potential cases of fake news or misinformation. Once detected, these cases could be flagged for review by human moderators or fact-checkers, who could then take appropriate action to address the issue.
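The flag-then-review flow described above can be sketched in a few lines of Python. This is only an illustration under strong assumptions: the cue phrases, the trusted domains, and the `ChatbotReply` type are all hypothetical, and simple keyword matching stands in for the much more capable language analysis a real detection tool would need. The point is the shape of the pipeline: check language, check sources, and queue anything suspicious for a human.

```python
from dataclasses import dataclass

# Hypothetical cue phrases and trusted domains, chosen only for illustration.
SENSATIONAL_CUES = ("shocking", "you won't believe", "they don't want you to know")
TRUSTED_DOMAINS = {"trusted-news.example", "health-agency.example"}


@dataclass
class ChatbotReply:
    text: str
    cited_domains: list[str]


def review_reasons(reply: ChatbotReply) -> list[str]:
    """Return the reasons a reply should go to a human reviewer (empty if none)."""
    reasons = []
    lowered = reply.text.lower()
    if any(cue in lowered for cue in SENSATIONAL_CUES):
        reasons.append("sensational language")
    if not reply.cited_domains:
        reasons.append("no sources cited")
    elif any(d not in TRUSTED_DOMAINS for d in reply.cited_domains):
        reasons.append("untrusted source")
    return reasons


def build_review_queue(replies):
    """Pair each flagged reply with its reasons; unflagged replies are skipped."""
    queue = []
    for reply in replies:
        reasons = review_reasons(reply)
        if reasons:
            queue.append((reply, reasons))
    return queue
```

Note that the sketch only flags replies and never blocks or rewrites them, which keeps the final decision with the human moderators or fact-checkers.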

Conclusion

AI chatbots like ChatGPT have transformed the way we interact with technology, but they also raise concerns about the spread of fake news and misinformation. There are no simple solutions to this problem, but several strategies can help: improving the quality of the datasets used to train chatbots, developing algorithms and tools to detect and flag misinformation, and identifying and removing sources of fake news. As AI chatbots continue to evolve, it will be important to remain vigilant and to ensure that these technologies are used responsibly and ethically.
